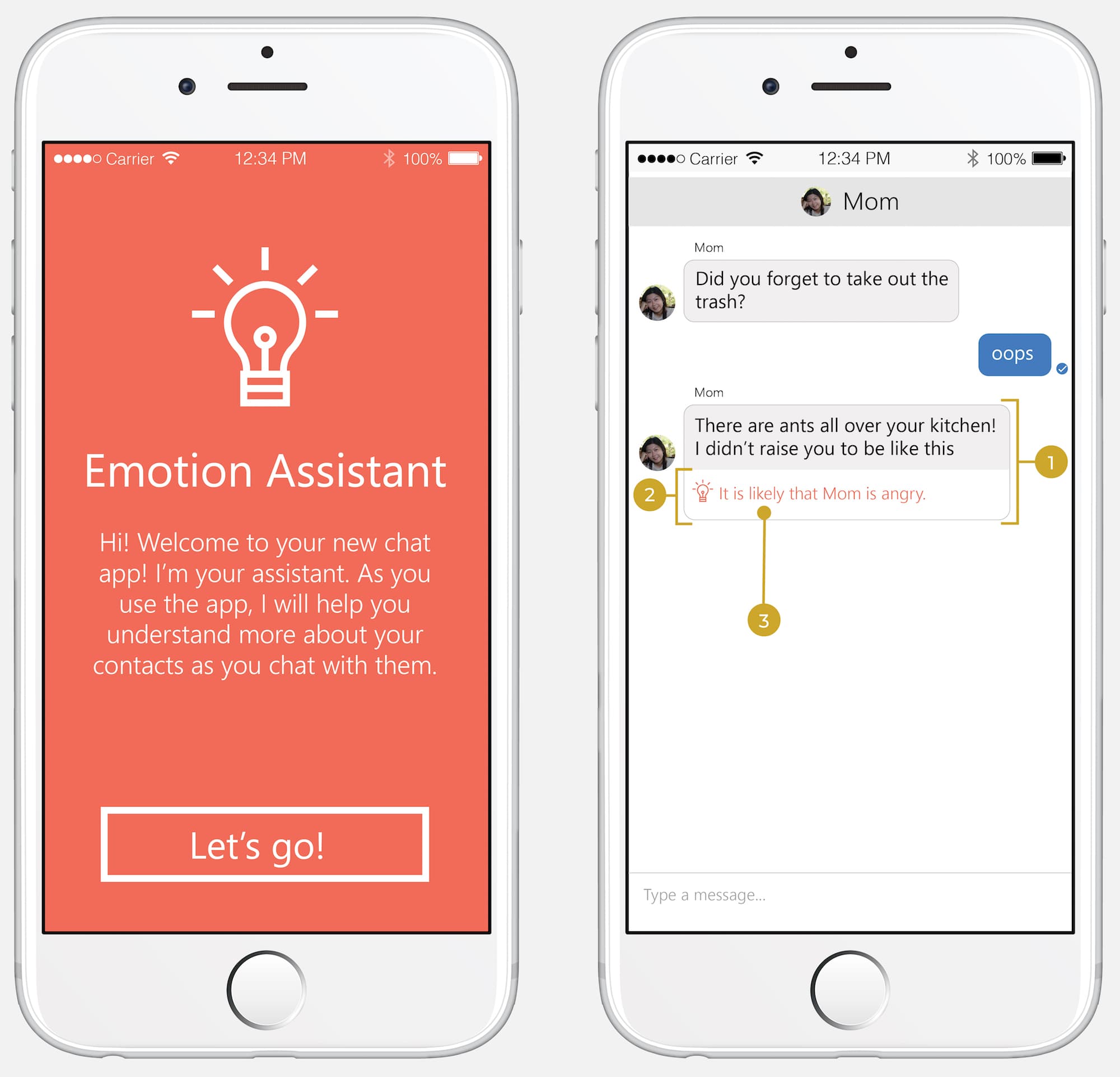

EQ Assistant is a chat assistant that uses machine learning and artificial intelligence to surface the emotions in text messages. It helps people communicate more effectively by reducing misunderstandings.

Have you ever mistaken a short text response from a friend for anger or indifference? Text messaging strips away much of the emotional information we rely on in conversation. Yet for many people, text-based messaging is the preferred and primary way of communicating with others. Modern text communication needs to evolve to fill these gaps, which can lead to misunderstandings and, potentially, to stress and strained relationships.

Our combined research produced 8 principles that shaped the first iteration of EQ Assistant:

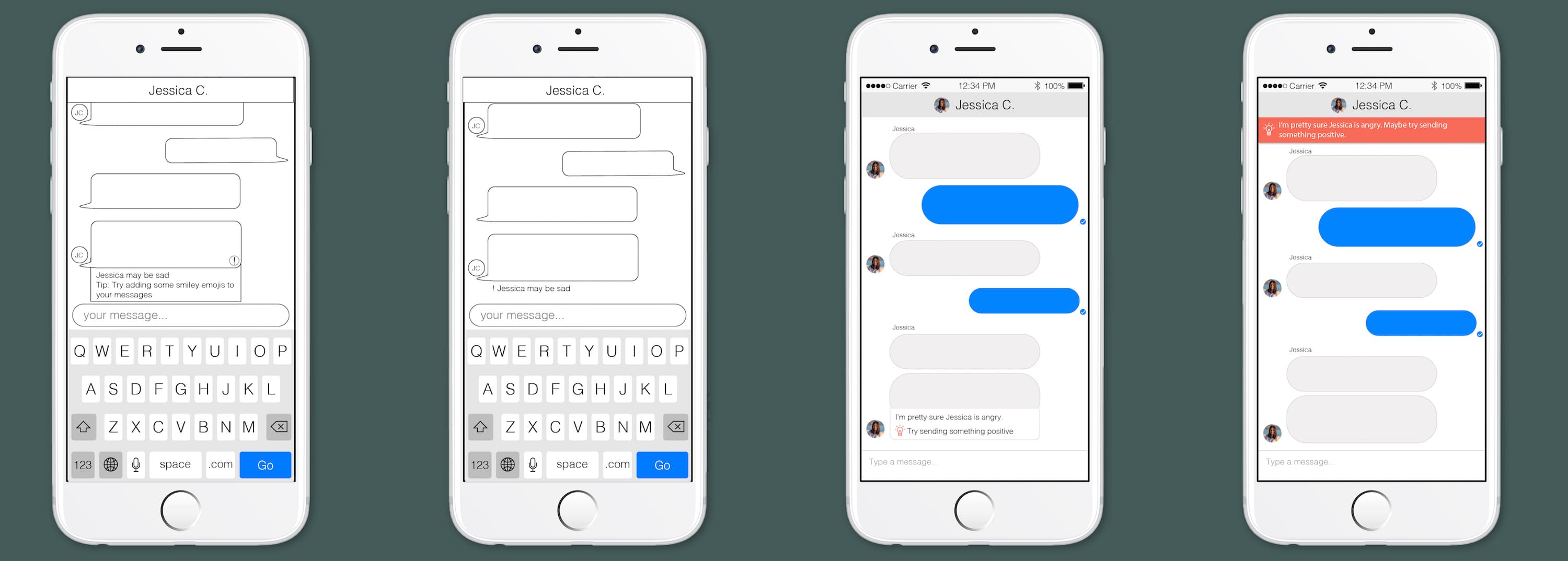

Using our research findings, I sketched possible solutions for EQ Assistant. I explored different types of systems (e.g., platform, app, plugin), as well as a variety of visual treatments, voice, and feedback UX.

Together, we chose a design direction and built a prototype for testing, informed by what we had learned in research.

We wanted to understand the usability and likability of our design, so we ran a usability study using paper prototypes. I developed a set of interview questions aimed at understanding how people perceived EQ Assistant as a communication tool. We ran the study in person with 7 participants.

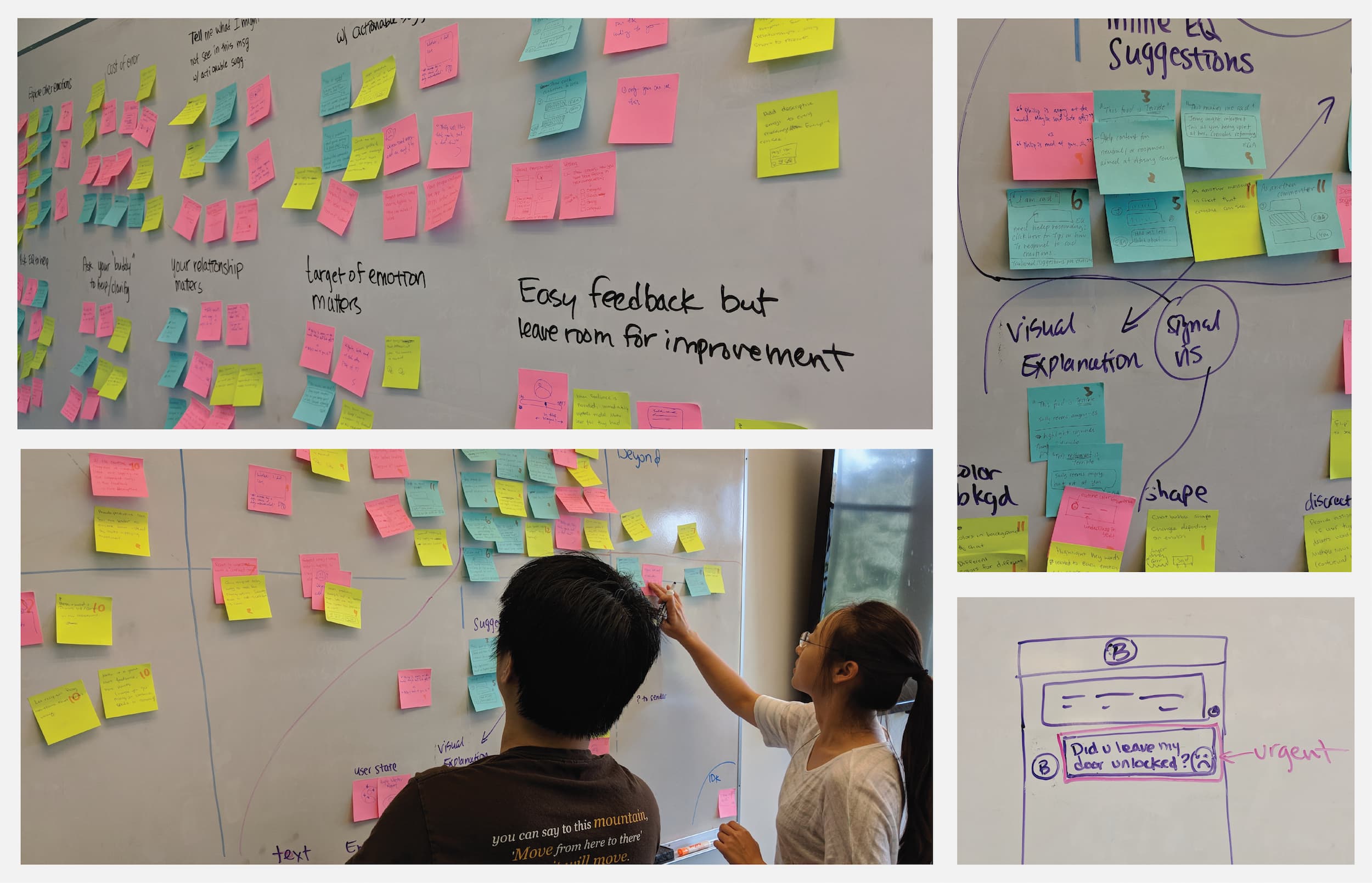

Once the study was complete, our team worked together to analyze the results using qualitative coding and affinity diagramming.

Our research revealed gaps in our first design. We took our findings through another round of ideation and affinity diagramming. I then sketched and prototyped a new iteration of EQ Assistant.

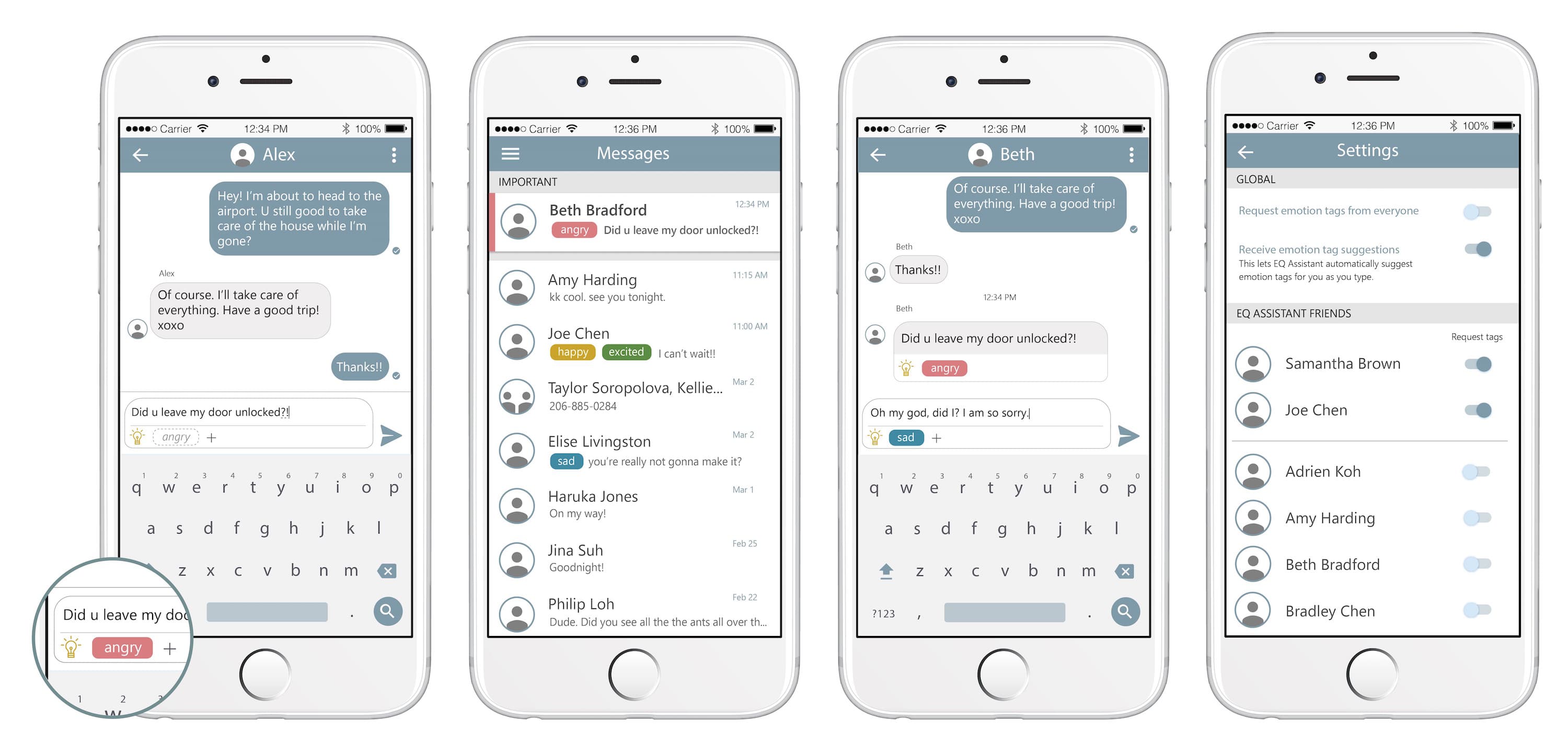

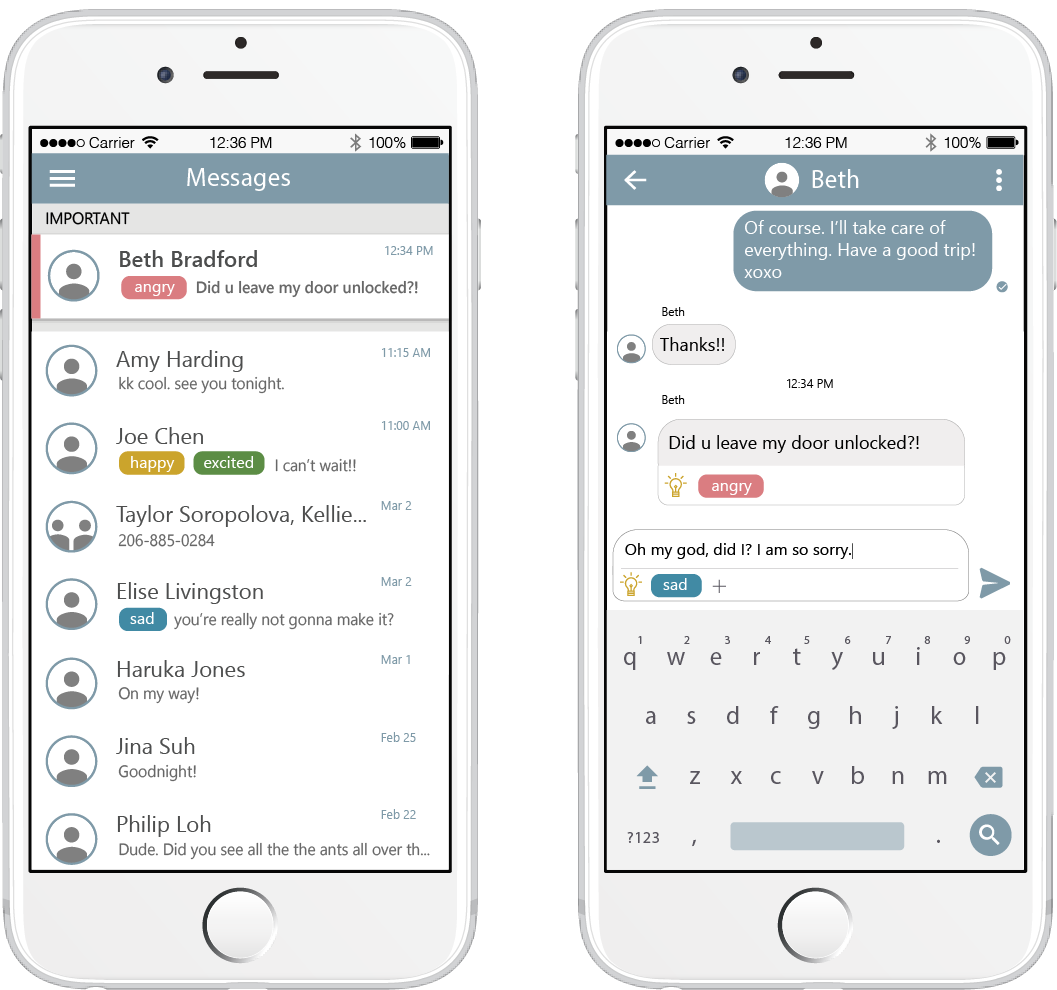

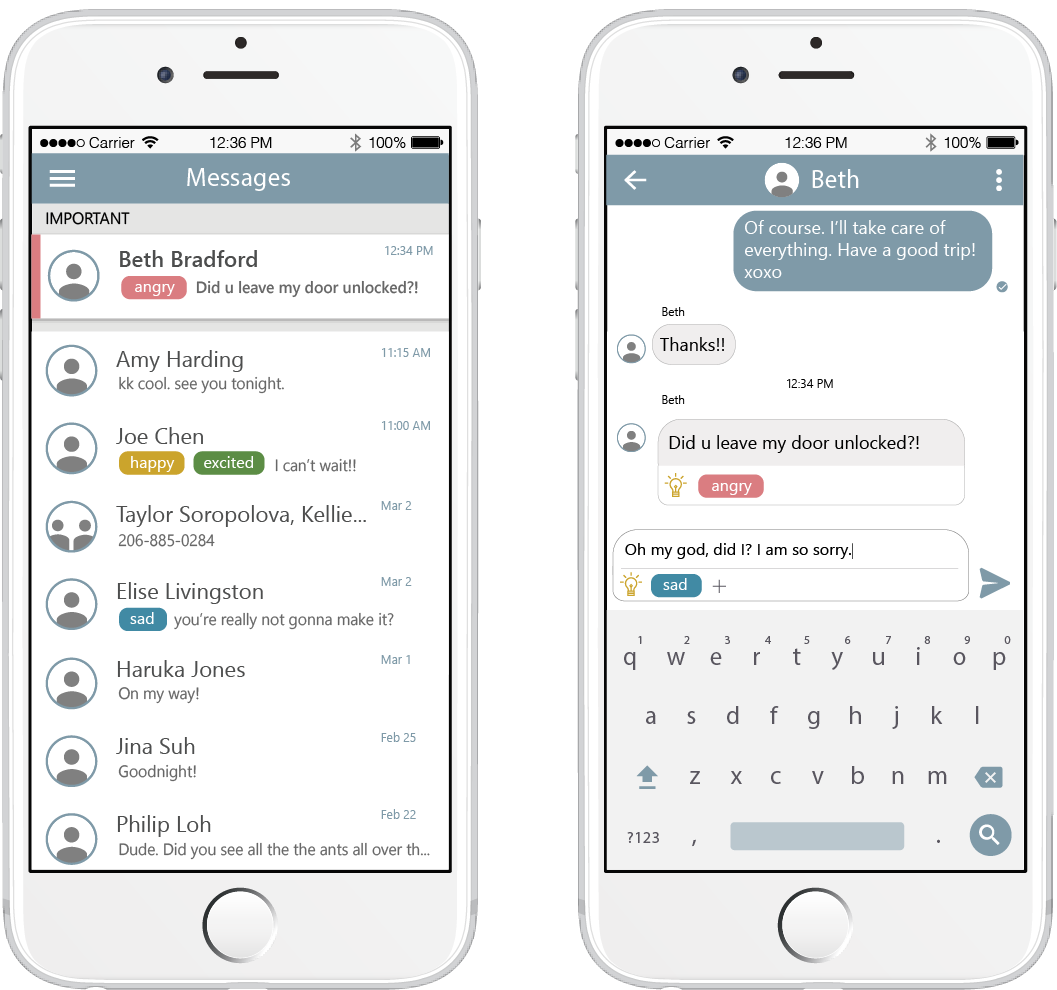

In our final design, we give control to the sender, who can add machine-suggested tags to messages to make their meaning clearer. Tapping a suggested tag to confirm it also serves as an integrated feedback mechanism.

We also triage messages by emotional urgency, which makes the tags more actionable. Users can adjust how emotion tags work for each person they communicate with, so every relationship can be handled in its own way.